Abstract

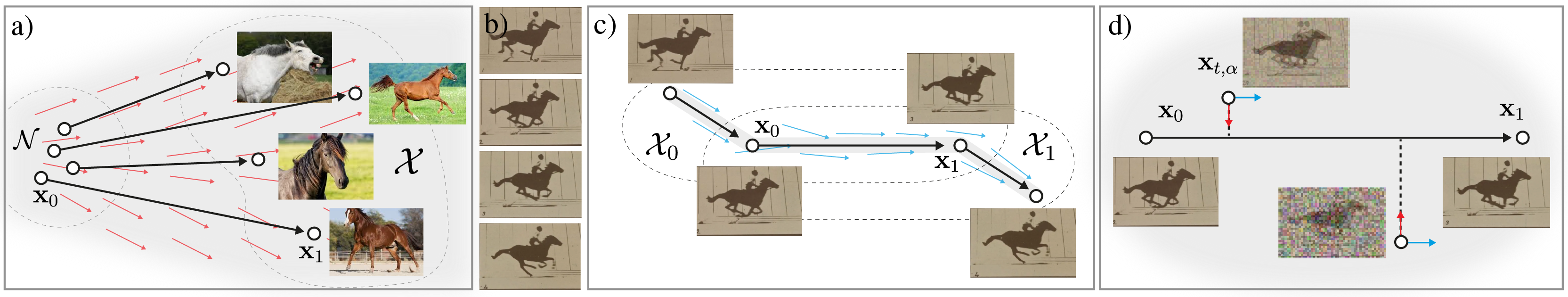

We propose a novel generative video model that robustly learns temporal change as a neural Ordinary Differential Equation (ODE) flow with a bilinear objective combining two aspects. The first is to map directly from past to future video frames. Previous work has mapped noise to new frames, which is computationally more expensive. Unfortunately, starting from the previous frame instead of from noise is more prone to drifting errors. Hence, second, we additionally learn to remove the accumulated errors as part of the joint objective by adding noise during training. We demonstrate unconditional video generation in a streaming manner on various video datasets. Quality is competitive with a conditional diffusion baseline, but speed is higher, i.e., fewer ODE solver steps are needed.
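As a reading aid, the two aspects can be written as a single bilinear path. The notation here is ours, not necessarily the paper's. $x_0$ and $x_1$ are consecutive frames. $z \sim \mathcal{N}(0, I)$ is the training noise (`noise` in the Method pseudocode). $\varepsilon$ is the inference noise level (`eps`).

The partial derivatives of the path are exactly the two regression targets. The sampling ODE then follows from the chain rule along the straight line from $(t, a) = (0, \varepsilon)$ to $(1, 0)$:

$$
x_{t,a} = x_0 + t\,(x_1 - x_0) + a\,z, \qquad
\frac{\partial x_{t,a}}{\partial t} = x_1 - x_0 \;\; (\text{target of } v), \qquad
\frac{\partial x_{t,a}}{\partial a} = z \;\; (\text{target of } d)
$$

$$
t(k) = k, \quad a(k) = \varepsilon\,(1 - k), \quad k \in [0, 1]
\;\;\Longrightarrow\;\;
\frac{dx}{dk} = \frac{dt}{dk}\,v + \frac{da}{dk}\,d = v - \varepsilon\,d .
$$

Starting from $x_0 + \varepsilon z$ at $k = 0$, the $v$ term carries the frame forward in time. The $d$ term removes the injected noise, which plays the role of accumulated error.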

Motivation

Can videos, as naturally high-dimensional time-series signals, be effectively modeled using neural ODEs?

There have been constant attempts, but also persistent challenges, including training efficiency, sample realism, and error accumulation. Our method, inspired by flow matching, aims to address all of these.

Method

The core of our method, for both training and sampling, can be summarized in the following Python pseudocode.

'''
Training (one epoch)
Inputs: consecutive_frames (data)
Outputs: Model (learned bi-flow)
'''

# Iterate over all data
for x0, x1 in consecutive_frames:
    # Bi-linear interpolation
    t = uniform_sample(0, 1)
    a = uniform_sample(0, 1)
    noise = normal_sample()
    x_t = x0 + t * (x1 - x0)
    x_t_a = x_t + a * noise

    # Compute the joint loss
    v, d = Model(x_t_a, t, a)
    loss = mse(v, x1 - x0) + mse(d, noise)

    # Optimize model, e.g., one SGD step
    ...

'''
Sampling
Inputs: Model (learned bi-flow), x0 (start frame), eps (inference noise level), N (#steps)
Outputs: x1 (next frame)
'''

# Initial value
noise = normal_sample()
x_0_eps = x0 + eps * noise

# Characteristic ODE
t0, t1, a0, a1 = 0, 1, eps, 0

def joint_ode(x_k, k):
    tk = t0 + k * (t1 - t0)
    ak = a0 + k * (a1 - a0)
    v, d = Model(x_k, tk, ak)
    return (t1 - t0) * v + (a1 - a0) * d

# Solve forward with an Euler solver, for example
dk = 1 / N
k = 0
x_k = x_0_eps
for _ in range(N):
    x_k = x_k + dk * joint_ode(x_k, k)
    k = k + dk

x1 = x_k
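The pseudocode leaves `Model`, `uniform_sample`, `normal_sample`, and `mse` abstract. Below is a minimal runnable NumPy sketch built on toy assumptions of our own, not the authors' code:

- frames are flat vectors;
- training is reduced to building one input/target example;
- instead of a trained network, a hypothetical `make_oracle_model` is used that is exact for a single known clean pair `(x0, x1)`.

All function names are illustrative. The sketch checks two things. First, the oracle reaches zero joint loss on the constructed targets. Second, the Euler solve from `(t, a) = (0, eps)` to `(1, 0)` removes the initial noise while moving to the next frame.

```python
import numpy as np


def make_training_example(x0, x1, rng):
    """Build one bi-flow training input and its two regression targets."""
    t = rng.uniform(0.0, 1.0)               # position between the two frames
    a = rng.uniform(0.0, 1.0)               # noise level
    noise = rng.standard_normal(x0.shape)   # same shape as a frame
    x_t = x0 + t * (x1 - x0)                # linear path from past to future frame
    x_t_a = x_t + a * noise                 # shifted along the noise direction
    return (x_t_a, t, a), (x1 - x0, noise)  # targets for v and d


def mse(pred, target):
    return float(np.mean((pred - target) ** 2))


def make_oracle_model(x0, x1):
    """Hypothetical idealized model for ONE known clean pair (x0, x1).

    A trained network can only approximate this. It exists here so the
    sampler can be checked without any training. Requires a > 0.
    """
    def model(x, t, a):
        v = x1 - x0                         # constant velocity along t
        d = (x - x0 - t * (x1 - x0)) / a    # recovers the injected noise
        return v, d
    return model


def sample_next_frame(model, x0, eps, n_steps, rng):
    """Euler solve of the joint ODE from (t, a) = (0, eps) to (1, 0)."""
    t0, t1, a0, a1 = 0.0, 1.0, eps, 0.0
    x_k = x0 + eps * rng.standard_normal(x0.shape)  # noisy initial value
    dk = 1.0 / n_steps
    for step in range(n_steps):
        k = step * dk                       # computed, not accumulated
        tk = t0 + k * (t1 - t0)
        ak = a0 + k * (a1 - a0)             # last step has ak = eps / n_steps > 0
        v, d = model(x_k, tk, ak)
        x_k = x_k + dk * ((t1 - t0) * v + (a1 - a0) * d)
    return x_k


rng = np.random.default_rng(0)
x0 = rng.standard_normal(16)                # toy "frame" as a flat vector
x1 = x0 + 0.5                               # toy next frame: a constant shift
inputs, targets = make_training_example(x0, x1, rng)
oracle = make_oracle_model(x0, x1)
v_pred, d_pred = oracle(*inputs)
print("joint loss:", mse(v_pred, targets[0]) + mse(d_pred, targets[1]))
x1_hat = sample_next_frame(oracle, x0, eps=0.3, n_steps=4, rng=rng)
print("max error:", np.abs(x1_hat - x1).max())
```

With this idealized model, the trajectory is a straight line in `k`. Euler therefore lands on `x1` for any `n_steps`, even 1, up to floating-point rounding. A learned model's paths are presumably only approximately straight, and that is where the number of solver steps starts to matter.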

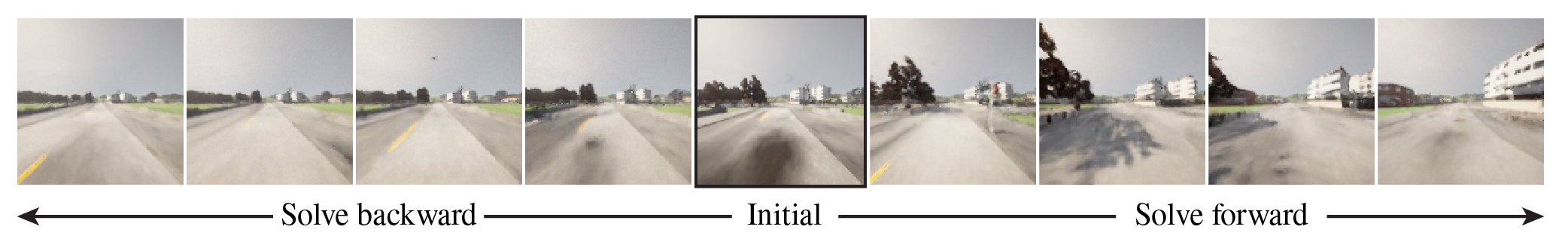

Results

Acknowledgement

We extend our gratitude to the reviewers for their constructive suggestions. We thank Xingchang Huang for helpful discussions in the early stage of this project. This project is supported by Meta Reality Labs and additionally by a bursary to Chen from The Rabin Ezra Trust.

Citation

@inproceedings{liu2025generative,
  title={Generative Video Bi-flow},
  author={Liu, Chen and Ritschel, Tobias},
  booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
  pages={19363--19372},
  year={2025}
}